Imagine yourself as a patient needing surgery. You can opt to try a new procedure that has shown supportive but not conclusive results in a preliminary study. Next, imagine yourself as a school principal needing to boost math scores. You can opt to try a new tutorial program that produced supportive but not conclusive results in a preliminary study. Are the risks in these two instances the same? In both educational and medical practice, we very much want new treatment approaches to be proven effective before they are adopted. But how much can “proven” be trusted? All experimental findings that compare one practice to another are subject to two types of errors[1].

Although research refers to them by the mundane labels “Type I” and “Type II,” the relative risks of these errors directly influence the probability that potentially effective interventions will be considered proven. Consider, for example, an educational research study that compares a new reading program to an existing one, and suppose further that the two are, in reality, equally effective. A Type I error occurs when the study erroneously concludes that one of them is superior (a “false positive” outcome). Conversely, if one of them actually is more effective, a Type II error occurs when the study fails to detect a difference between them (a “false negative” outcome). Unfortunately, neither the researcher nor the research consumer can know whether the results of a given study are accurate or false. Only through replication studies can confidence increase.
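For readers who want to see the two error types in action, the following Python sketch simulates many hypothetical two-group studies. It assumes normally distributed scores with a standard deviation of 1, 100 students per group, a simple two-sided z-test, and (for the Type II case) a true advantage of 0.2 standard deviations. These values and the function names `study_finds_difference` and `error_rates` are illustrative choices, not drawn from any real study.

```python
import random
from statistics import NormalDist, fmean


def study_finds_difference(n_per_group, true_effect, alpha, rng):
    """Simulate one two-group study; return True if it declares a significant difference.

    Scores are normal with SD = 1, so true_effect is in standard-deviation units.
    For simplicity this uses a two-sided z-test with the SD treated as known.
    """
    new_program = [rng.gauss(true_effect, 1.0) for _ in range(n_per_group)]
    old_program = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    std_error = (2.0 / n_per_group) ** 0.5  # SE of a difference in means when SD = 1
    z = (fmean(new_program) - fmean(old_program)) / std_error
    critical_value = NormalDist().inv_cdf(1 - alpha / 2)
    return abs(z) > critical_value


def error_rates(n_per_group=100, true_effect=0.2, alpha=0.05, trials=2000, seed=1):
    """Estimate Type I and Type II error rates by repeating the simulated study."""
    rng = random.Random(seed)
    # Type I (false positive): programs are equally effective, yet a difference is declared.
    false_positives = sum(
        study_finds_difference(n_per_group, 0.0, alpha, rng) for _ in range(trials)
    )
    # Type II (false negative): the new program is truly better, yet no difference is declared.
    false_negatives = sum(
        not study_finds_difference(n_per_group, true_effect, alpha, rng) for _ in range(trials)
    )
    return false_positives / trials, false_negatives / trials


if __name__ == "__main__":
    for alpha in (0.05, 0.01):
        type1, type2 = error_rates(alpha=alpha)
        print(f"alpha = {alpha}: Type I rate = {type1:.3f}, Type II rate = {type2:.3f}")
```

Under these assumptions, theory predicts a Type I rate close to the chosen alpha and a Type II rate of roughly 0.71 at alpha = 0.05, rising to roughly 0.88 at alpha = 0.01, so the simulated rates should land near those values and illustrate the trade-off between the two errors.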

Which Error to Protect Against: A “Risky” Business

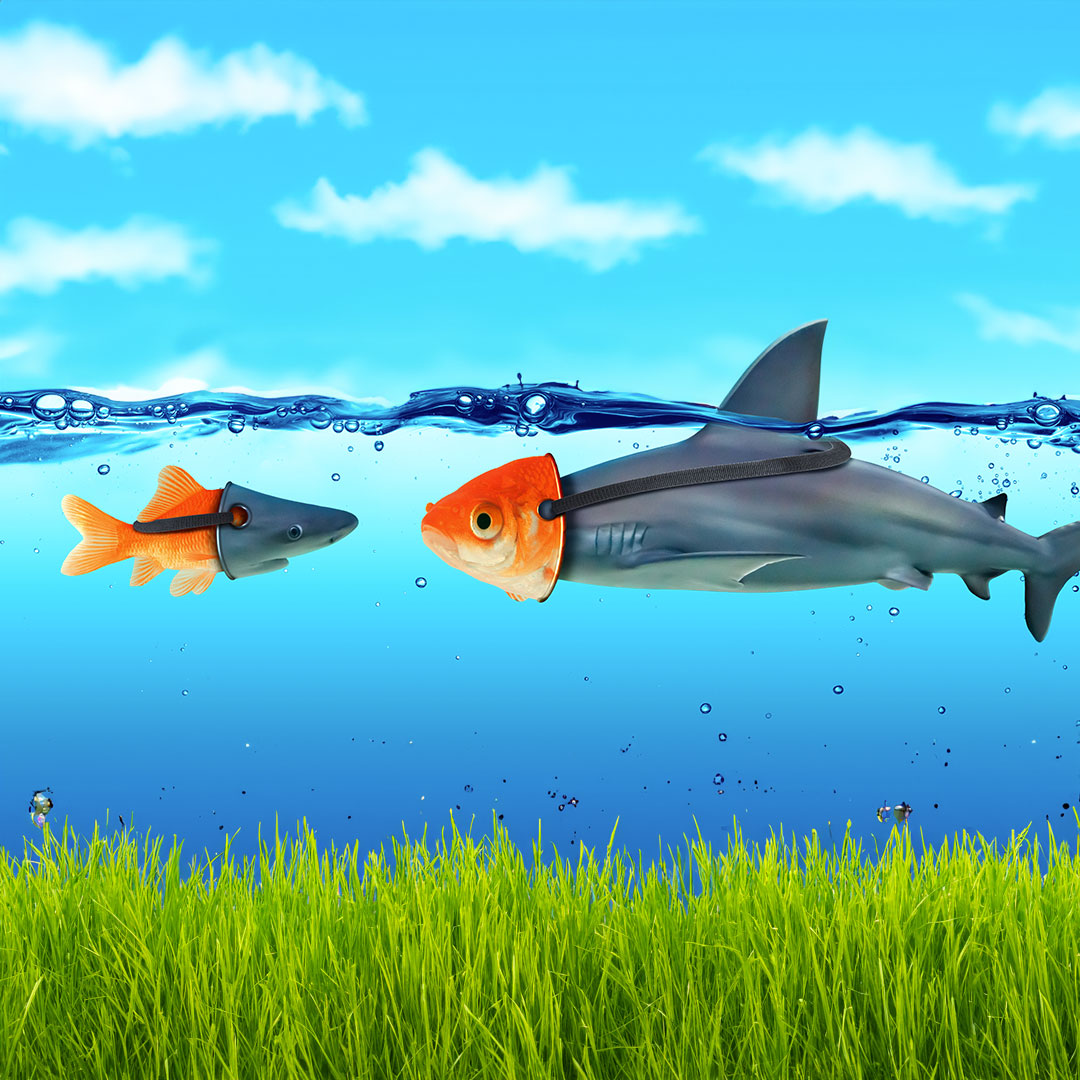

Is it more important to protect against false positives (Type I errors) or false negatives (Type II errors)? In the medical arena, the answer seems obvious and, in some instances, is truly a matter of life and death. No one wants to receive a medical treatment that was falsely identified as superior to accepted practices. In education, however, the risks of Type I errors seem much more benign. For vetted educational programs in subjects like math, reading, or science, statistically significant negative effects are rather infrequent and, where obtained, tend to be small in magnitude and certainly not “harmful” or irreversible. Schools can replace ineffective or unpopular programs rather quickly and routinely.

The more strongly Type I errors are guarded against, the more innovation and change can be stifled, because for a study of a given size, demanding stronger protection against false positives raises the chance of false negatives. Advancements in technology and AI are spurring new and improved educational interventions at a rapid rate. But under federal law and district policies, school districts’ adoption of those programs increasingly depends on whether the programs show evidence of effectiveness that meets the standards of the Every Student Succeeds Act (ESSA). “Strong evidence” (Tier 1) requires statistically significant results from a rigorous randomized controlled trial (RCT), “Moderate evidence” (Tier 2) from a rigorous quasi-experimental design (QED), and “Promising evidence” (Tier 3) from a less rigorous or smaller comparison-group study. Far below in the hierarchy is Tier 4 evidence, such as a supportive literature base or a logic model.

For program providers,[2] the minimal gateway to having their products purchased by school districts is all too frequently Tier 3, which for many interventions can be a steep climb[3]. Under ESSA, all interventions (large and small; core and supplemental; academic, administrative, and behavioral) are evaluated under essentially identical criteria. Even at Tier 3, an innovative program must show statistically significant advantages over a comparison condition on a quantifiable educational outcome. That might sound reasonable enough, but as Matthew Kraft’s recent research (2023)[4] demonstrates, typical educational program effects on student achievement are actually rather small: 0.05 standard deviations or less. Demonstrating statistical significance for effects that small requires far larger samples than most modest comparison-group studies can recruit. Yet such programs still move the achievement needle positively for the average student.
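To make the “steep climb” concrete, the sketch below applies the standard normal-approximation sample-size formula for comparing two group means, n per group = 2(z_alpha + z_power)^2 / d^2, where d is the effect size in standard deviations. It assumes students are individually randomized to two equal-sized groups and a two-sided test at alpha = 0.05 with 80% power; the function name `n_per_group` is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate students needed per group to detect effect_size (in SD units).

    Assumes a two-sided test, the normal approximation, equal group sizes, and
    individual (not classroom or school) randomization.
    """
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha / 2)  # critical value that limits Type I errors
    z_power = z(power)          # quantile that limits Type II errors to 1 - power
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


for d in (0.05, 0.10, 0.20):
    print(f"d = {d:.2f}: about {n_per_group(d):,} students per group")
```

Under these assumptions, an effect of 0.05 SD calls for about 6,280 students per group, versus about 393 per group for 0.20 SD. Because school studies typically assign whole classrooms or schools rather than individual students, the real requirement is usually larger still.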

Barriers and Inequities in the Evidence Quest

Potentially effective programs face multiple barriers to meeting ESSA evidence standards. Here are some examples:

- The vast majority of educational products are intended for supplemental instruction. A program used in classrooms for, say, only 25-60 minutes a week has limited capacity to demonstrate measurable achievement gains relative to programs used every day for a full class period.

- Efficacy studies aren’t cheap. Even a small, Tier 3-level study can cost $25,000 to $50,000 or more in evaluator fees. Well-resourced organizations therefore have a natural advantage in commissioning multiple studies of their products.

- Because of costs and school district permissions, many efficacy studies are limited to a single year. Newly adopted programs therefore must quickly get up and running at high fidelity to outperform established comparison programs (which may themselves be effective).

- Not all types of programs are created equal in their ability to show measurable impacts. Math and reading programs focusing on achievement, for example, have larger potential effect sizes than programs primarily targeting attendance, behavior, or social-emotional learning (SEL).

- Interventions focusing on teacher professional development, parent involvement, and professional learning communities may take several years to impact student outcomes.

Leveling the Playing Field for Diverse Interventions

What reforms are needed in evaluating evidence for educational interventions? Current practices can be too stringent to give fair consideration to potentially useful programs that are likely to produce small but still educationally meaningful impacts. ESSA standards are not the problem. Rather, opportunities for reform lie in how consumers (the school districts and states seeking effective interventions) apply them. Some thoughts and suggestions follow:

- Just because one product has higher-tier evidence than a competing product doesn’t mean it’s the better choice. The two products may serve different purposes and target different outcomes, be intended for different dosages, and be regarded differently by schools.

- An evidence rating based on a single study may not be trustworthy. Support from multiple studies, even if at lower evidence tiers, strengthens confidence in a product’s effects.

- Products addressing educational needs that are more difficult to measure, or that require extended time to affect outcomes, should be given more leeway under evidence criteria.

- Similar latitude should be extended to lower-dosage programs (e.g., a math supplement used for 30-60 minutes each week).
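One common way to combine several studies is a fixed-effect meta-analysis, which weights each study’s effect size by the inverse of its variance. It is shown here only as a sketch, not as a method the evidence standards or this article prescribe, of how several small studies, none significant alone, can jointly yield a more precise estimate. The effect sizes and standard errors below are hypothetical, and the fixed-effect model assumes every study estimates the same true effect; a random-effects model is often preferable when studies differ in design, setting, or dosage.

```python
import math


def pool_fixed_effect(effects, std_errors):
    """Return the inverse-variance (fixed-effect) pooled effect and its standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]  # more precise studies get more weight
    pooled = sum(w * d for w, d in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Hypothetical results from three small studies of the same product (in SD units).
effects = [0.12, 0.08, 0.15]
std_errors = [0.09, 0.10, 0.11]

for d, se in zip(effects, std_errors):
    print(f"single study: d = {d:.2f}, z = {d / se:.2f}")  # each z is below 1.96

pooled, pooled_se = pool_fixed_effect(effects, std_errors)
print(f"pooled: d = {pooled:.3f}, SE = {pooled_se:.3f}, z = {pooled / pooled_se:.2f}")
```

With these made-up inputs, no single study reaches the conventional 1.96 threshold, while the pooled estimate (about 0.115 SD, with a standard error of about 0.057) just clears it.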

For these types of products and situations, sufficient evidence for adoption could fall short of ESSA Tier 3 standards (at our center, we sometimes refer to such evidence as “Tier 4+”). Such studies should be conducted by a third-party evaluator (not the program developer) and should demonstrate quantifiable benefits. One example is a study that shows stronger-than-average pretest-posttest gains for the intervention sample when no control group is available. Another is a rigorous case study in a few schools that descriptively shows that the intervention improves student outcomes, is easy to implement, and is viewed positively by teachers and principals. In their reports, evaluators should help consumers interpret the credibility and meaning of the supportive evidence beyond whether it categorically qualifies as ESSA Tier 3 or 4. Consumers, in turn, should reflectively weigh the evidence (whether it qualifies as Tier 3 or 4+), the intervention’s costs, its alignment with the particular area of need, and, ultimately, the relative risks of trying it out or not.

[1] The next few paragraphs provide background for readers less familiar with research methods.

[2] For convenience, I’ll use “provider” as a generic label for what are commonly known as publishers, vendors, and developers.

[3] Tier 3 criteria are commonly misinterpreted as permitting “correlational” or pre-post gain studies involving only an intervention group. The ESSA criterion of “controlling for sample selection bias” implies a comparison with a control group of some type.

[4] Kraft, M. A. (2023). The effect-size benchmark that matters most: Education interventions often fail. Educational Researcher, 52(3), 183–187.